Play around with algorithms ranging from simple Machine Learning to advanced Deep Learning.

These simulations run in your browser.

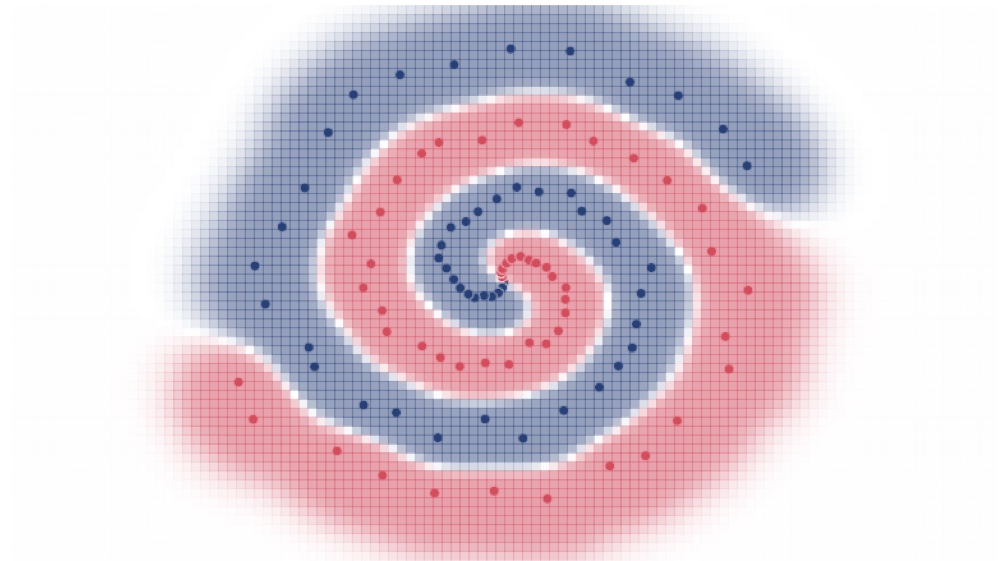

Deep Learning is a subset of Machine Learning. ML algorithms are designed to perform a given task without being given explicit instructions; instead, they learn from data. Examples include Linear Regression, Random Forests, Decision Trees and Support Vector Machines. Deep Learning, specifically, uses Artificial Neural Networks as the architecture for its algorithms.
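To make "learning from data instead of explicit instructions" concrete, here is a minimal sketch of Linear Regression fitted by ordinary least squares. The data points are made up for illustration: they follow the rule y = 2x + 1, but that rule is never written into `fit_line` (a hypothetical helper name). The function recovers it from the examples alone.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    # The best-fit line always passes through the point (mean_x, mean_y).
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Toy data generated from y = 2x + 1; the rule itself is never given to fit_line.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_line(xs, ys)
print(slope, intercept)  # 2.0 1.0
```

The other algorithms listed follow the same pattern of inferring a rule from examples, with different model shapes.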

Research into artificial neural networks dates back to the 1940s, and the field has since gone by many names. Deep Learning had a resurgence in September 2012, when Alex Krizhevsky, working with Ilya Sutskever and Geoffrey Hinton, introduced the landmark network AlexNet.

Artificial Neural Networks (ANNs), also called Connectionist Systems, are loosely inspired by the biological brain.

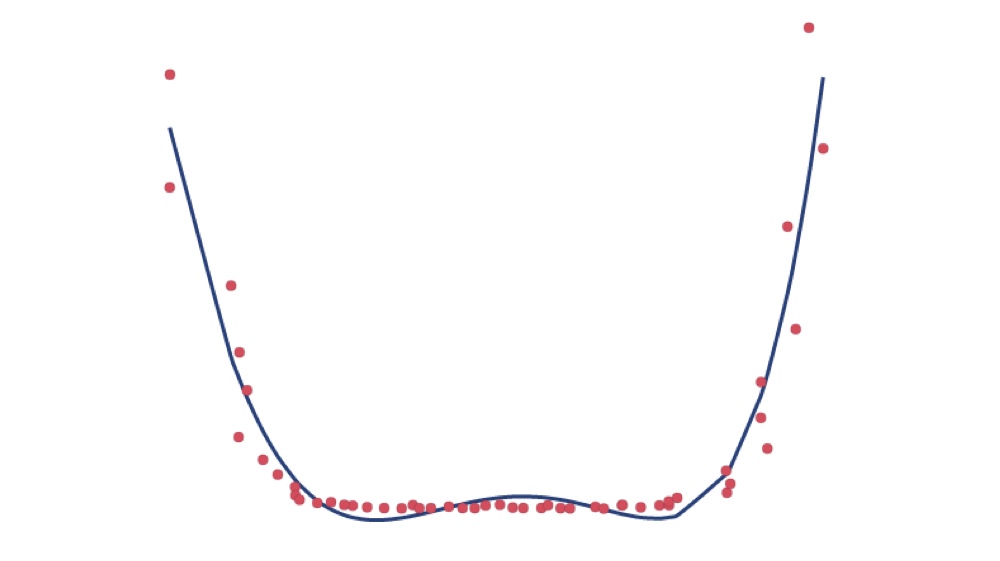

To build an ANN, a computer program creates virtual neurons, or nodes, and connects them to one another. These connections carry information, or data, between the nodes. Each connection is initially assigned a random value called a weight. Each node performs a simple algebraic operation: it combines the data received from the previous nodes with the weights of its incoming connections, typically as a weighted sum.
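A minimal sketch of what a single node and a layer of nodes compute, assuming the common formulation where each incoming connection carries a weight. The bias term and the sigmoid activation are assumptions added here because they are standard choices; the exact operation varies between networks. The function names `neuron` and `layer` are hypothetical.

```python
import math
import random


def sigmoid(z):
    # Squashes any real number into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    # Weighted sum of the values received from the previous nodes, plus a bias,
    # passed through a nonlinear activation function (sigmoid, by assumption).
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)


def layer(inputs, weight_rows, biases):
    # Every node in the layer has its own weight for each incoming connection.
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


random.seed(0)
inputs = [0.5, -1.2, 3.0]
# Random initial weights: 2 nodes, each with 3 incoming connections.
weights = [[random.uniform(-1, 1) for _ in inputs] for _ in range(2)]
biases = [0.0, 0.0]
outputs = layer(inputs, weights, biases)
print(outputs)  # two values between 0 and 1
```

Stacking several such layers, with each layer's outputs feeding the next layer's inputs, gives a feedforward neural network.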

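The Universal Approximation Theorem states that a neural network with at least one hidden layer of enough nodes can approximate any continuous relationship between input and output to arbitrary accuracy, provided the right weights are found. Training is the process of adjusting the weights to find them.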
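The theorem only says suitable weights exist; it does not say how to find them. A common way is gradient descent with backpropagation, sketched below under stated assumptions: the target function (f(x) = x² on [-1, 1]), the 8 tanh hidden nodes, the learning rate and the number of epochs are all arbitrary illustrative choices, not values from any particular system.

```python
import math
import random

random.seed(42)
HIDDEN = 8     # number of hidden nodes (arbitrary)
LR = 0.05      # learning rate (arbitrary)
EPOCHS = 2000

# Training data: samples of the target function f(x) = x**2 on [-1, 1].
xs = [i / 10 for i in range(-10, 11)]
ys = [x * x for x in xs]

# Random initial weights: input->hidden (w, b) and hidden->output (v, c).
w = [random.uniform(-1, 1) for _ in range(HIDDEN)]
b = [random.uniform(-1, 1) for _ in range(HIDDEN)]
v = [random.uniform(-1, 1) for _ in range(HIDDEN)]
c = 0.0


def forward(x):
    h = [math.tanh(w[j] * x + b[j]) for j in range(HIDDEN)]
    y = sum(v[j] * h[j] for j in range(HIDDEN)) + c
    return h, y


def loss():
    # Mean of 0.5 * squared error over the training set.
    return sum(0.5 * (forward(x)[1] - t) ** 2 for x, t in zip(xs, ys)) / len(xs)


initial_loss = loss()
for _ in range(EPOCHS):
    gw = [0.0] * HIDDEN
    gb = [0.0] * HIDDEN
    gv = [0.0] * HIDDEN
    gc = 0.0
    for x, t in zip(xs, ys):
        h, y = forward(x)
        err = y - t  # derivative of 0.5 * (y - t)**2 with respect to y
        gc += err
        for j in range(HIDDEN):
            gv[j] += err * h[j]
            # Backpropagate through tanh: d tanh(z)/dz = 1 - tanh(z)**2.
            dz = err * v[j] * (1 - h[j] ** 2)
            gw[j] += dz * x
            gb[j] += dz
    # Full-batch gradient step: move every weight against its average gradient.
    n = len(xs)
    for j in range(HIDDEN):
        w[j] -= LR * gw[j] / n
        b[j] -= LR * gb[j] / n
        v[j] -= LR * gv[j] / n
    c -= LR * gc / n

final_loss = loss()
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
```

The loss shrinks as the weights are adjusted, so the network's output moves closer to x². More hidden nodes or more epochs generally allow a closer fit.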

What the network learns depends on the task it is trained to perform. AlexNet was designed to recognize objects in images (Computer Vision): the input was an image and the output was the name of the object. By adjusting its weights, AlexNet learned to approximate the function that maps the patterns in an image to the object it shows.
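A classifier like AlexNet does not output a name directly. Its last layer produces one score per class, and AlexNet feeds these into a 1000-way softmax that turns them into probabilities. The sketch below shows only that final step, with three hypothetical class names and made-up scores standing in for a real network's output.

```python
import math


def softmax(scores):
    # Subtract the largest score before exponentiating, for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical class names and raw scores ("logits") from a network's last layer.
classes = ["cat", "dog", "car"]
logits = [1.2, 3.4, 0.3]
probs = softmax(logits)
# The predicted name is the class with the highest probability.
label = classes[probs.index(max(probs))]
print(label)  # dog
```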

At Yobee, the objective is to decode the complexities of financial markets. In this scenario, the input is data such as GDP, currency exchange rates and stock prices, and the output is the expected movement of the market.

GPT (Generative Pre-trained Transformer) is a family of Neural Network models that has revolutionized Natural Language Processing. Unlike previous Deep Learning models, GPTs are based on the Transformer architecture introduced by Vaswani et al. in their seminal paper "Attention Is All You Need", published on June 12, 2017.

Transformers differ from previous architectures by using a mechanism called "self-attention", which lets the model weigh how relevant every other word in a sentence is to each word, regardless of how far apart they are. This breakthrough enabled models to better understand context and relationships within text, leading to significant improvements in language tasks.
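A minimal sketch of single-head scaled dot-product self-attention, softmax(QKᵀ / √d_k)·V, as defined in "Attention Is All You Need". Assumptions: the token vectors and the projection matrices `wq`, `wk`, `wv` are random stand-ins for learned values, and the sizes are arbitrary. Real GPT models also use multiple heads and a causal mask so each token attends only to earlier positions; both are omitted here for brevity.

```python
import math
import random


def matmul(a, b):
    # Plain-Python matrix product: rows of a dotted with columns of b.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    s = sum(exps)
    return [e / s for e in exps]


def self_attention(x, wq, wk, wv):
    """Scaled dot-product self-attention over a sequence of token vectors x."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of K
    # scores[i][j]: how strongly token i attends to token j, at any distance.
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]  # each row sums to 1
    # Each output vector is a weighted mix of all value vectors.
    return matmul(weights, v), weights


def rand_matrix(rows, cols):
    return [[random.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]


random.seed(0)
d_model, d_k, seq_len = 4, 3, 5
x = rand_matrix(seq_len, d_model)  # 5 tokens, each a 4-dimensional vector
wq, wk, wv = rand_matrix(d_model, d_k), rand_matrix(d_model, d_k), rand_matrix(d_model, d_k)
out, weights = self_attention(x, wq, wk, wv)
print(len(out), len(out[0]))  # 5 3
```

Because every token's scores are computed against every other token in one step, the first and last tokens can influence each other as directly as neighbours can.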

The first GPT model was introduced by OpenAI in June 2018. Since then, subsequent iterations (GPT-2, GPT-3, GPT-4) have continuously pushed the boundaries of what's possible in language understanding and generation. These models are trained on vast amounts of text data to predict the next word (more precisely, the next token), and can then perform a wide range of tasks, from text completion to translation, summarization and even code generation, without being explicitly programmed for each task.
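GPT models generate text autoregressively: predict one token, append it, and feed the longer text back in. The sketch below shows only that loop. As a plainly swapped-in stand-in for a Transformer, the "model" is a toy bigram table that looks at the last word alone, and it always picks the most frequent next word (greedy decoding). The corpus and the helper names `train_bigram` and `generate` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    # Count, for each word, which words follow it in the training text.
    words = text.split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, prompt, max_new_words=5):
    # Autoregressive loop: predict one word, append it, then predict again.
    words = prompt.split()
    for _ in range(max_new_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # no known continuation for this word
        words.append(followers.most_common(1)[0][0])  # greedy choice
    return " ".join(words)


corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigram(corpus)
print(generate(model, "the", max_new_words=4))  # the cat sat on the
```

A real GPT replaces the bigram lookup with a Transformer that conditions on the entire preceding text, and usually samples from the predicted probabilities rather than always taking the top choice.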

90% code generated by LLMs

🤖

100% code curated by humans

🗿